Our battle-tested process makes data extraction as smooth as it can get.

1. Initial interaction

Once you reach out to us, we will send a few questions by email to gather more information about the problem you are trying to solve.

Once we have enough details about your project, we will perform a feasibility analysis and design the solution that works best for you.

Our pricing depends on the complexity of the source websites and the volume of data being scraped. Once pricing and terms of engagement are set, we will send you an invoice payable by bank (wire) transfer or PayPal. Get your data or your money back: that's our promise.

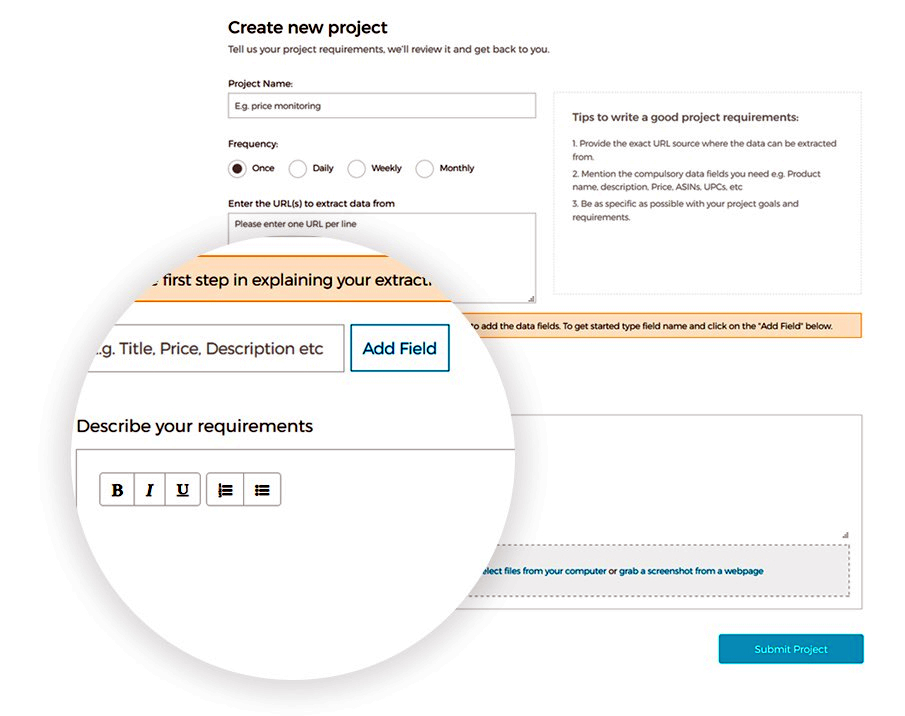

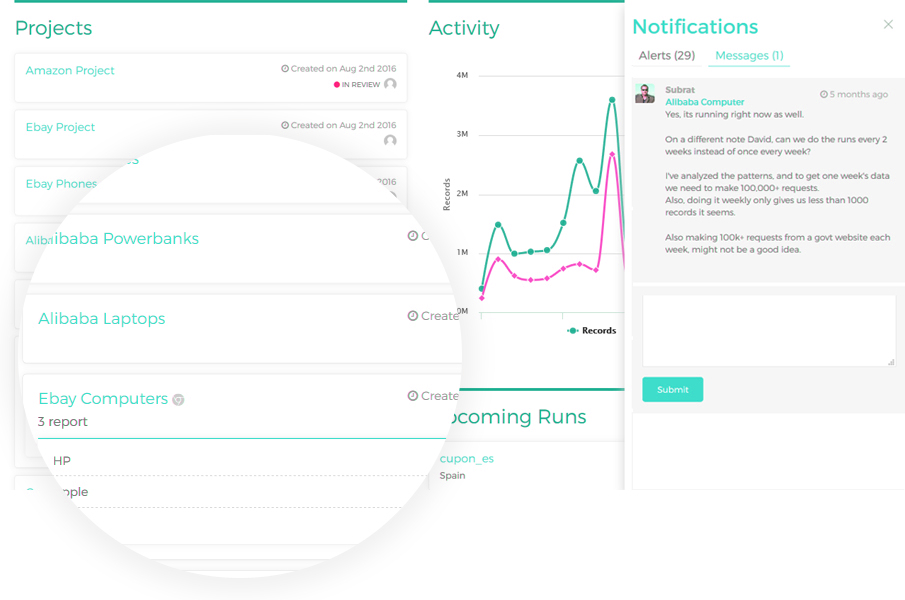

Once the payment is complete, we will create an account for you on our customer support portal. Our project management tool helps us deliver a great customer experience: you can communicate directly with our data mining engineers and your customer success manager to keep the project on track.

Our data mining team will then share a sample of the extracted data for your review. If you find any issues in the sample, the team will fix them. The most common fixes involve changes to the output format or to parsing, along with other small adjustments. The sample is usually approved on the second or third iteration.

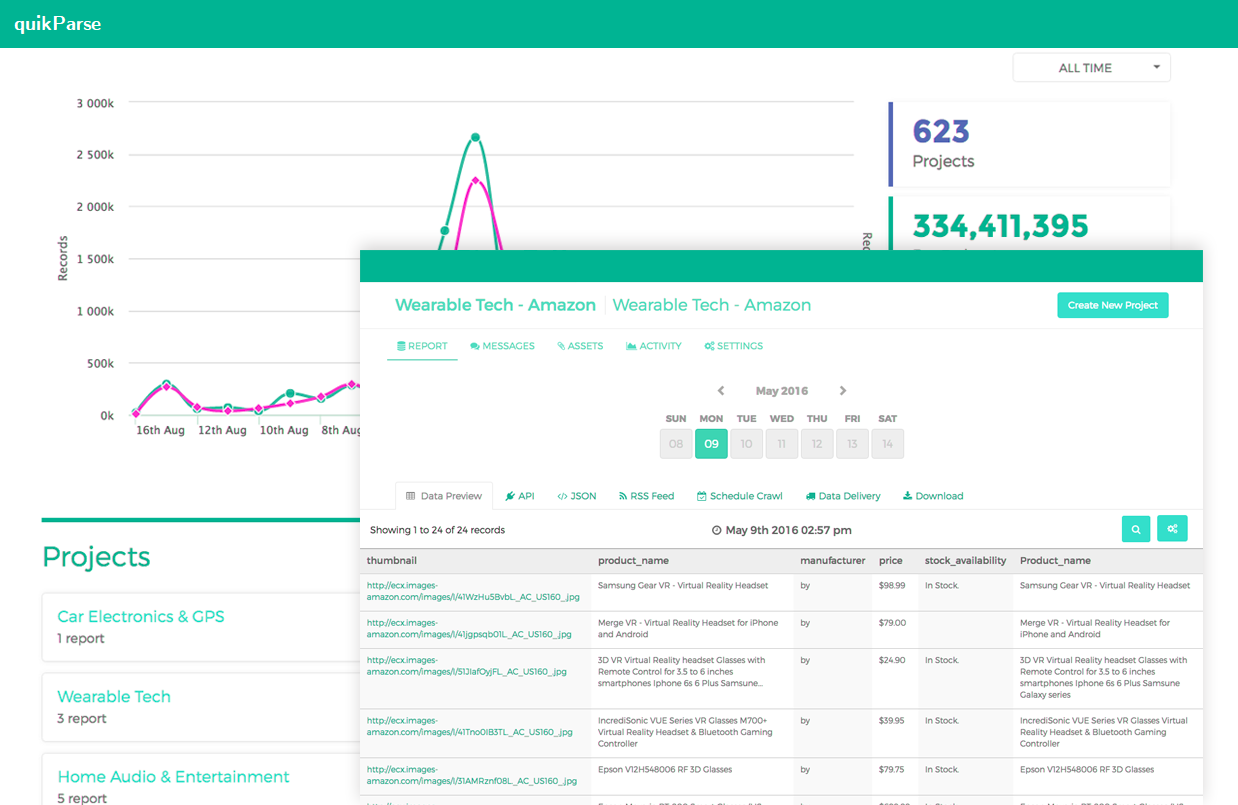

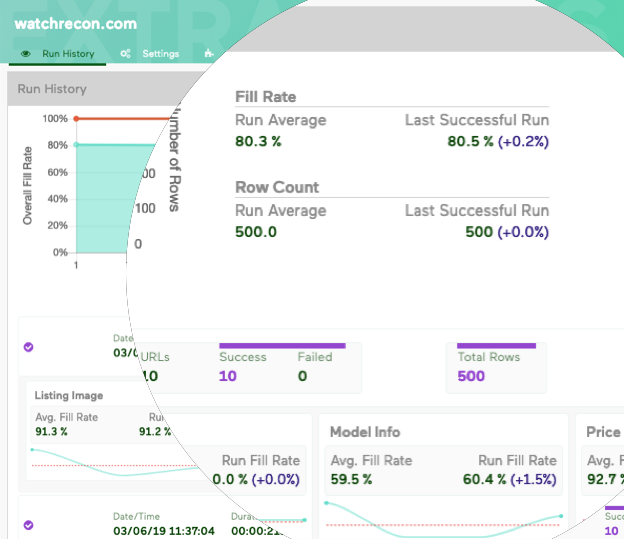

Once you approve the sample data, we will run a full scrape on our distributed data extraction platform. The extracted data is then pushed to our quality assurance (QA) tool.

Our two-stage QA process uses both a machine-learning-based tool and human reviewers to verify the data. First, the tool checks the output for faulty data. If it finds any, the data is sent back to the data mining team for correction. If no faulty data is found, our QA team checks a random sample of records to verify the quality once more.

Once the data passes quality checks, it is delivered to you through common data-sharing channels such as Amazon S3, Dropbox, Box, FTP upload, or even a custom API.

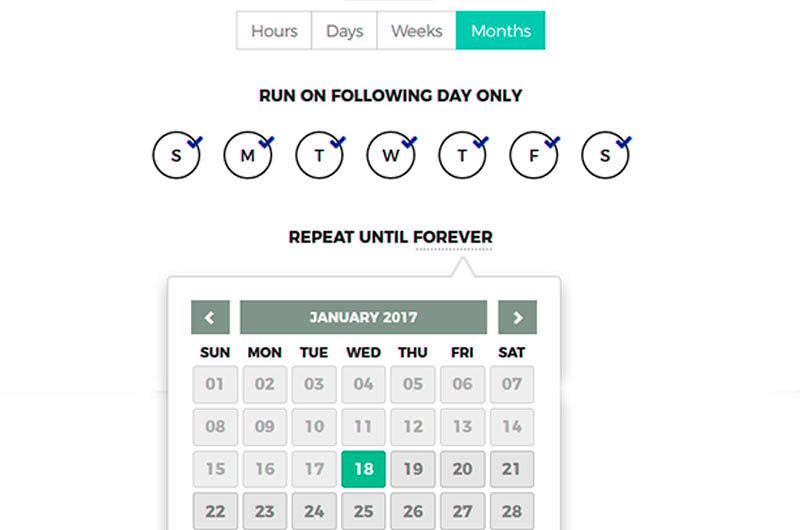

Our customers get free maintenance of their data scrapers as part of their subscription. If you need data on a recurring basis, we can schedule the job on our platform so that the data is gathered and shared with you automatically.